It is often necessary to share data among the jobs of a GitLab CI pipeline. Artifacts (and, to some extent, cache) are the mechanism GitLab provides for this purpose.

So what if this data is sensitive and we don't want it to be easily accessible?

By ‘accessible’, I mean storing the data in plain text on disk, or letting the plain data be obtained outside the pipeline execution without restriction.

Consider the following pipeline definition:

```yaml
image: alpine:latest

stages:
  - create-sensitive-data
  - use-sensitive-data

create-sensitive-data:
  stage: create-sensitive-data
  script:
    - echo 'this is a sensitive data' > sensitive.txt
  artifacts:
    paths:
      - sensitive.txt

use-sensitive-data:
  stage: use-sensitive-data
  needs:
    - job: create-sensitive-data
      artifacts: true
  script:
    - cat sensitive.txt
```

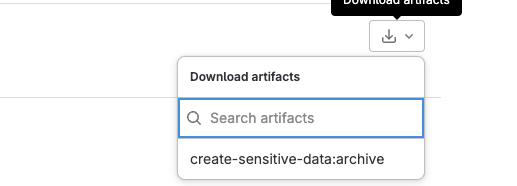

We can pass any data, including sensitive data, through artifacts, but that doesn't seem secure: artifacts are easily accessible from the pipeline page in the GitLab web GUI.

We could set an expiry on the artifacts (`artifacts:expire_in`), but even then the sensitive data would stay accessible until it expires, which is longer than needed.

The basic idea of a possible solution is to store the sensitive data in encrypted form, decrypt it only when needed, and clean it up at the end.

Encrypt Artifacts

The first step to securing the sensitive data in artifacts is to encrypt it. The tool we will use for this purpose is GnuPG (a.k.a. GPG).

GPG is an implementation of the OpenPGP standard (also known as PGP) as defined by RFC 4880. It enables encrypting and signing data and features a versatile key management system.

With GPG, a file can be encrypted using symmetric (passphrase-based) encryption:

```shell
gpg --symmetric --batch --yes --passphrase <passphrase> -o sensitive.txt.gpg sensitive.txt
```

and decrypted again:

```shell
echo <passphrase> | gpg --batch --always-trust --yes --passphrase-fd 0 -d -o sensitive.txt sensitive.txt.gpg
```

(PS: I won’t go into the details of GPG here)
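The two commands can also be wrapped in a pair of small shell functions. This is a minimal sketch, not the exact setup used in this article: the function names `encrypt_file` and `decrypt_file` are hypothetical, the passphrase is read from the `ARTIFACT_ENCRYPTION_PASSPHRASE` environment variable, and it is fed to GPG through standard input (`--passphrase-fd 0`) in both directions, so it does not appear in the process list the way `--passphrase <passphrase>` does. The `--pinentry-mode loopback` option assumes GnuPG 2.1 or later.

```shell
# Minimal sketch: symmetric encryption/decryption helpers around GPG.
# Assumes GnuPG 2.1+ and the passphrase in $ARTIFACT_ENCRYPTION_PASSPHRASE.

# encrypt_file <input> <output>: writes a passphrase-encrypted copy of <input>
encrypt_file() {
  # The passphrase goes in on stdin (fd 0) instead of the command line,
  # so it is not visible in the process list.
  printf '%s\n' "$ARTIFACT_ENCRYPTION_PASSPHRASE" |
    gpg --batch --yes --pinentry-mode loopback --passphrase-fd 0 \
      --symmetric -o "$2" "$1"
}

# decrypt_file <input> <output>: restores the plain file from <input>
decrypt_file() {
  printf '%s\n' "$ARTIFACT_ENCRYPTION_PASSPHRASE" |
    gpg --batch --yes --pinentry-mode loopback --passphrase-fd 0 \
      --decrypt -o "$2" "$1"
}
```

In a job, `encrypt_file sensitive.txt sensitive.txt.gpg` would take the place of the `gpg --symmetric` line, and `decrypt_file sensitive.txt.gpg sensitive.txt` the place of the decryption line.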

We can add the two GPG commands to the pipeline as follows:

```yaml
image: alpine:latest

stages:
  - create-sensitive-data
  - use-sensitive-data

create-sensitive-data:
  stage: create-sensitive-data
  before_script:
    - apk add gnupg
  script:
    - echo 'this is a sensitive data' > sensitive.txt
  after_script:
    - gpg --symmetric --batch --yes --passphrase "$ARTIFACT_ENCRYPTION_PASSPHRASE" -o sensitive.txt.gpg sensitive.txt
  artifacts:
    paths:
      - sensitive.txt.gpg

use-sensitive-data:
  stage: use-sensitive-data
  needs:
    - job: create-sensitive-data
      artifacts: true
  before_script:
    - apk add gnupg
    - echo "$ARTIFACT_ENCRYPTION_PASSPHRASE" | gpg --batch --always-trust --yes --passphrase-fd 0 -d -o sensitive.txt sensitive.txt.gpg
  script:
    - cat sensitive.txt
```

where `ARTIFACT_ENCRYPTION_PASSPHRASE` is the symmetric encryption passphrase, which can be set as a CI/CD variable (preferably a masked one, so it does not show up in job logs).
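One way to produce a strong value for `ARTIFACT_ENCRYPTION_PASSPHRASE` is to Base64-encode random bytes. The sketch below uses a hypothetical `generate_passphrase` function and assumes `head -c` and `base64` are available (both exist in GNU coreutils and BusyBox). As far as I know, the Base64 alphabet is accepted by GitLab for masked variables, so the output can be masked.

```shell
# Hypothetical helper: prints 32 random bytes, Base64-encoded,
# as a single 44-character line.
generate_passphrase() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
  printf '\n'
}
```

Running `generate_passphrase` once locally and pasting the result into the CI/CD variable settings is enough; the pipeline itself never needs to generate it.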

Now, even though the artifacts are still stored on disk and downloadable, they are encrypted and useless to anyone who does not have the passphrase.

Clean Up Artifacts

Even though the data is encrypted, it still feels insecure to keep it on disk and downloadable longer than needed, so we will add a cleanup step that removes the artifacts once we're done with them.

To do that, we can use the GitLab API. There are several ways to authenticate, but I prefer a personal access token (with the `api` scope), which I will store as the `GITLAB_CI_TOKEN` CI/CD variable.

We want to delete the artifacts of the job that creates the sensitive data, so we need to find that job first:

```shell
JOBS=$(curl --request GET --header "PRIVATE-TOKEN: $GITLAB_CI_TOKEN" "$CI_API_V4_URL/projects/$CI_PROJECT_ID/pipelines/$CI_PIPELINE_ID/jobs")
JOB_ID=$(echo "$JOBS" | jq 'map(select(.name == "create-sensitive-data"))[0].id')
```

where `CI_API_V4_URL`, `CI_PROJECT_ID`, and `CI_PIPELINE_ID` are predefined GitLab CI/CD variables that we use to compose the full URL of the API call.

Here we first fetch the jobs of the running pipeline with the GitLab API, then use jq to pick out the job that creates the sensitive data by its name, which is `create-sensitive-data` in our example.
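Two details can make this lookup fragile. The jobs endpoint is paginated (20 results per page by default), so in a pipeline with many jobs the target job might not be on the first page; adding `?per_page=100` to the URL helps. And if no job matches, the jq expression above prints the string `null`, which would end up in the DELETE URL. A sketch of a stricter lookup, using a hypothetical `find_job_id` function that reads the jobs JSON from standard input:

```shell
# Hypothetical helper: reads the JSON returned by
#   GET $CI_API_V4_URL/projects/$CI_PROJECT_ID/pipelines/$CI_PIPELINE_ID/jobs?per_page=100
# on stdin and prints the ID of the first job named $1.
# Returns a non-zero status if the input is not a job list or no job matches.
find_job_id() {
  # "// empty" turns a missing match (null) into no output at all
  _job_id=$(jq -r --arg name "$1" 'map(select(.name == $name))[0].id // empty') || return 1
  [ -n "$_job_id" ] || return 1
  printf '%s\n' "$_job_id"
}
```

With it, `JOB_ID=$(echo "$JOBS" | find_job_id create-sensitive-data)` returns a non-zero status instead of silently producing `null`.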

Now that we have the job ID of `create-sensitive-data`, we can delete its artifacts with another GitLab API call:

```shell
curl --request DELETE --header "PRIVATE-TOKEN: $GITLAB_CI_TOKEN" "$CI_API_V4_URL/projects/$CI_PROJECT_ID/jobs/$JOB_ID/artifacts"
```
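By default, `curl` exits with status 0 even when the API answers with an HTTP error such as 403 or 404, so a failed cleanup can go unnoticed. The `--fail` option makes curl return a non-zero exit status for HTTP responses of 400 and above. A minimal sketch with a hypothetical `delete_job_artifacts` function, relying on the same predefined variables and on `GITLAB_CI_TOKEN`:

```shell
# Hypothetical helper: deletes the artifacts of job $1 in the current project.
# Relies on CI_API_V4_URL and CI_PROJECT_ID (predefined by GitLab CI) and on
# GITLAB_CI_TOKEN (a personal access token stored as a CI/CD variable).
delete_job_artifacts() {
  # --fail: non-zero exit status on HTTP errors (status >= 400)
  # --silent --show-error: no progress bar, but errors are still printed
  curl --fail --silent --show-error --request DELETE \
    --header "PRIVATE-TOKEN: $GITLAB_CI_TOKEN" \
    "$CI_API_V4_URL/projects/$CI_PROJECT_ID/jobs/$1/artifacts"
}
```

In the cleanup job, the final `curl` line could then become `delete_job_artifacts "$JOB_ID"`, so that a rejected request (for example, a token without enough permissions) fails the job instead of passing silently.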

Putting it all together, the pipeline definition becomes:

```yaml
image: alpine:latest

stages:
  - create-sensitive-data
  - use-sensitive-data
  - cleanup-sensitive-data

create-sensitive-data:
  stage: create-sensitive-data
  before_script:
    - apk add gnupg
  script:
    - echo 'this is a sensitive data' > sensitive.txt
  after_script:
    - gpg --symmetric --batch --yes --passphrase "$ARTIFACT_ENCRYPTION_PASSPHRASE" -o sensitive.txt.gpg sensitive.txt
  artifacts:
    paths:
      - sensitive.txt.gpg

use-sensitive-data:
  stage: use-sensitive-data
  needs:
    - job: create-sensitive-data
      artifacts: true
  before_script:
    - apk add gnupg
    - echo "$ARTIFACT_ENCRYPTION_PASSPHRASE" | gpg --batch --always-trust --yes --passphrase-fd 0 -d -o sensitive.txt sensitive.txt.gpg
  script:
    - cat sensitive.txt

cleanup-sensitive-data:
  stage: cleanup-sensitive-data
  when: always  # run the cleanup even if an earlier job failed
  before_script:
    - apk add curl jq
  script:
    - 'JOBS=$(curl --request GET --header "PRIVATE-TOKEN: $GITLAB_CI_TOKEN" "$CI_API_V4_URL/projects/$CI_PROJECT_ID/pipelines/$CI_PIPELINE_ID/jobs")'
    - JOB_ID=$(echo "$JOBS" | jq 'map(select(.name == "create-sensitive-data"))[0].id')
    - 'curl --request DELETE --header "PRIVATE-TOKEN: $GITLAB_CI_TOKEN" "$CI_API_V4_URL/projects/$CI_PROJECT_ID/jobs/$JOB_ID/artifacts"'
```

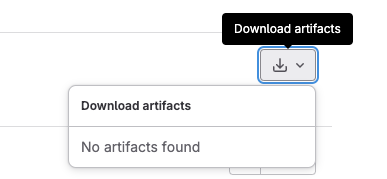

Now, once the pipeline has finished, no downloadable artifacts remain in the GitLab web GUI.

What's Next?

We may need to store the sensitive data in multiple files instead of a single one. In that case, we can bundle them into a compressed archive before encryption and extract it after decryption:

```yaml
# ...

create-sensitive-data:
  stage: create-sensitive-data
  before_script:
    - apk add gnupg
  script:
    - echo 'this is a sensitive data' > sensitive1.txt
    - echo 'this is another sensitive data' > sensitive2.txt
  after_script:
    - tar -czvf sensitive.tar.gz sensitive1.txt sensitive2.txt # compress files
    - gpg --symmetric --batch --yes --passphrase "$ARTIFACT_ENCRYPTION_PASSPHRASE" -o sensitive.tar.gz.gpg sensitive.tar.gz
  artifacts:
    paths:
      - sensitive.tar.gz.gpg

use-sensitive-data:
  stage: use-sensitive-data
  needs:
    - job: create-sensitive-data
      artifacts: true
  before_script:
    - apk add gnupg
    - echo "$ARTIFACT_ENCRYPTION_PASSPHRASE" | gpg --batch --always-trust --yes --passphrase-fd 0 -d -o sensitive.tar.gz sensitive.tar.gz.gpg
    - tar -xzvf sensitive.tar.gz # extract files
  script:
    - cat sensitive1.txt
    - cat sensitive2.txt

# ...
```
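A variation is to pipe `tar` straight into `gpg`, so the unencrypted archive is never written to disk on either side. In this sketch (hypothetical function names, GnuPG 2.1+ assumed), the archive travels through standard input, so the passphrase is handed to GPG on a separate file descriptor (3) through a here-document.

```shell
# Minimal sketch: bundle and encrypt files in one pipeline, and the reverse.
# Assumes GnuPG 2.1+ and the passphrase in $ARTIFACT_ENCRYPTION_PASSPHRASE.

# encrypt_bundle <output.tar.gz.gpg> <file>...
encrypt_bundle() {
  out="$1"
  shift
  # tar writes the compressed archive to stdout ("-f -"); gpg reads it from
  # stdin and reads the passphrase from fd 3, fed by the here-document.
  tar -czf - "$@" |
    gpg --batch --yes --pinentry-mode loopback --passphrase-fd 3 \
      --symmetric -o "$out" 3<<EOF
$ARTIFACT_ENCRYPTION_PASSPHRASE
EOF
}

# decrypt_bundle <input.tar.gz.gpg>: extracts the files into the current directory
decrypt_bundle() {
  # gpg writes the decrypted archive to stdout, tar extracts it from stdin
  gpg --batch --yes --pinentry-mode loopback --passphrase-fd 3 \
    --decrypt "$1" 3<<EOF | tar -xzf -
$ARTIFACT_ENCRYPTION_PASSPHRASE
EOF
}
```

Usage would be `encrypt_bundle sensitive.tar.gz.gpg sensitive1.txt sensitive2.txt` in the producing job and `decrypt_bundle sensitive.tar.gz.gpg` in the consuming one. Without `set -o pipefail` (available in bash), only the exit status of the last command in each pipe is checked.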

Why Artifacts and Not Cache?

GitLab cache is another option for sharing data between jobs, even though, according to the GitLab documentation, that is not its main purpose.

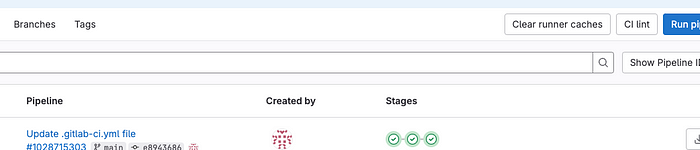

Its main advantage in terms of accessibility and security is that it cannot be downloaded from the web GUI. However, that doesn't mean it isn't stored somewhere, for example on the runner's disk. And, as far as I know, there is no automated way to clear the cache via the API (let me know if I'm wrong); it can only be cleared manually from the web GUI, with the Clear Runner Caches button on the pipelines page.

That’s why I would prefer using artifacts.

This is the solution I've come up with until GitLab introduces built-in support for sharing secret data among jobs. Thanks for reading; I hope it helps.